Autonomous Racing

Simulated Multidirectional Training Racetrack

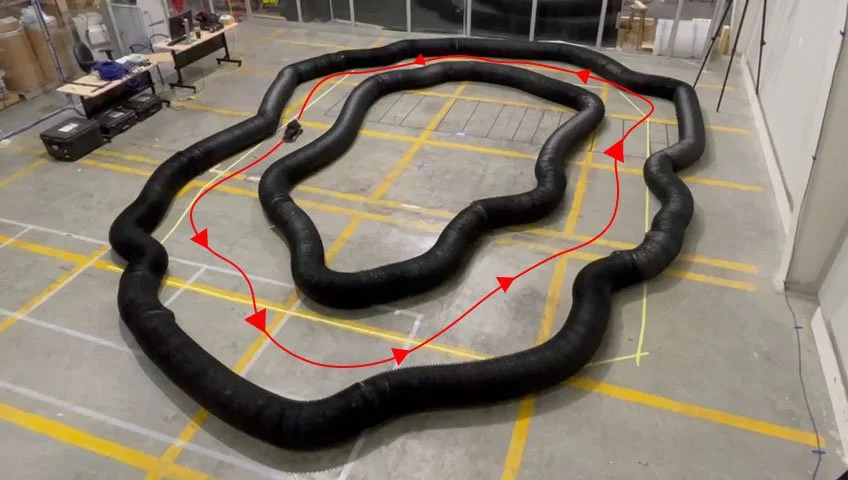

Time-Optimal Trajectory in the Multidirectional Training Racetrack

(a)

(b)

(c)

Zero-Shot Real-World Transfer to New Racetracks without Prior Maps

(a) Straight and simple corners. (b) Long cornering length. (c) Multiple cornering directions in quick succession.

Motorsport has historically driven automobile innovation by challenging the world's best car manufacturers to design, and develop vehicles that push the limits of contemporary technology, and compete at physical vehicle limits. Autonomous racing provides a similar avenue for development of high-speed autonomous driving algorithms in dynamic environments to expedite safe, and robust Autonomous Vehicle (AV) deployment. Conventional racing algorithms utilize optimization methods such as Model Predictive Control (MPC) and sampling methods such as Rapid Random Trees (RRT) that require prior knowledge of environment characteristics such as racetrack boundary coordinates, and obstacle vehicle positions extracted from a perception module, which increases computation complexity, and hinders high sampling rates for high speed applications.

End-to-end Artificial Intelligence (AI) models are comparatively computationally efficient, and do not require prior maps, enabling high autonomy that generalizes to new racetrack layouts, however, training such models is challenging due to the large amount of well curated training data required for supervised Deep Learning (DL) which is time consuming and labor intensive to collect and label, and challenges to bridging the simulation to reality gap using Deep Reinforcement Learning (DRL) to leverage accelerated training in simulation. A DRL method was developed [1], trained and evaluated using in-house simulation [2] and hardware [3] platforms developed using open-source tools, for zero-shot simulation to real-world policy transfer using a combination of high-fidelity sensor and actuator models, and a reward component to learn the difference between real-world and simulator physics.

Racing requires prediction and planning to execute control signals in sequence to maximize velocity at physical limits in highly nonlinear domains. The learned policy selected actions at these limits to navigate multiple turns of varying directions and radii in the handcrafted multidirectional training racetrack, conforming to an optimal racing trajectory that navigated to the apex of each corner to traverse the shorter inside region to minimize lap time, identical to professional racing drivers. The mixture of turning directions and lengths in the training environment prevented the model from overfitting to a specific layout to enable out-of-distribution generalization by providing an array of observation sequences in a constrained environment for learning of obstacle avoidance behavior at the maximum attainable velocity within the laws of physics, which required planning sequences of actions using detected object profiles, that facilitated dynamic obstacle avoidance. The DRL model computed control inputs using significantly fewer operations than conventional methods such as Nonlinear Model Predictive Control (NMPC) [4], and did not require prior maps or track boundary information. The policy learned in simulation without safety considerations for uncompromised performance was transferred zero-shot to the physical Autonomous Mobile Robot (AMR), with no additional training in the real-world, demonstrating generalization to new multidirectional racetracks, and out-of-distribution generalization to navigation in static and dynamic obstacle filled environments.

The DRL model utilized less computation resources for better racing performance compared to a modified wall following PID controller and MPC combined with Artificial Potential Function (APF), and lapped faster than non-expert humans with manual control. On average, the policy navigated the evaluated racetracks 30% faster than the tested methods, while utilizing the same CPU usage as the PID controller, and half that of the optimization method.

References

[1] S. Sivashangaran, A. Khairnar and A. Eskandarian, “Exploration Without Maps via Zero-Shot Out-of-Distribution Deep Reinforcement Learning,” arXiv preprint arXiv:2402.05066, Feb. 2024. (Link)

[2] S. Sivashangaran, A. Khairnar and A. Eskandarian, “AutoVRL: A High Fidelity Autonomous Ground Vehicle Simulator for Sim-to-Real Deep Reinforcement Learning," IFAC-PapersOnLine, vol. 56, no. 3, pp. 475-480, Dec. 2023. (Link) (Preprint)

[3] S. Sivashangaran and A. Eskandarian, “XTENTH-CAR: A Proportionally Scaled Experimental Vehicle Platform for Connected Autonomy and All-Terrain Research," Proceedings of the ASME 2023 International Mechanical Engineering Congress and Exposition. Volume 6: Dynamics, Vibration, and Control. New Orleans, LA, USA, Oct. 29–Nov. 2, 2023. V006T07A068. American Society of Mechanical Engineers. (Link) (Preprint)

[4] S. Sivashangaran, D. Patel and A. Eskandarian, “Nonlinear Model Predictive Control for Optimal Motion Planning in Autonomous Race Cars," IFAC-PapersOnLine, vol. 55, no. 37, pp. 645-650, Nov. 2022. (Link)